Invisible Bugs

How great engineers catch the problems no one sees, until it's too late.

In code reviews, the easiest thing to do is verify that something works. You check the logic, run the tests, maybe click through a few UI flows, and if it behaves as expected, you approve. Most reviews stop there, because it’s easy to approve code that looks functional and polished.

But the most important issues in software aren’t obvious at first glance. The real weight of a review lies in catching what doesn’t break now—but will break later. These are the invisible bugs: shortcuts in structure, fragile dependencies, overly clever abstractions, or decisions made without thought for what comes next.

They’re invisible because they don’t raise alarms today. Product owners won’t notice. Customers won’t complain. The dashboard is green. But these decisions quietly shape your system’s ability to move, scale, and respond to pressure. And when the future demands speed, whether to ship a feature in record time, pivot under pressure, or scale a new product line, those hidden cracks will slow you down or even break you entirely.

Code review isn’t just about surface-level correctness. It’s a time to ask:

- Is this building towards a sustainable system?

- Are we paying off or quietly accruing technical debt?

- Is this a balanced trade-off or just the path of least resistance?

It takes more effort to do this well. You need to understand the current problem and its context in the broader system. You need to zoom out, not just verify the diff. But this is the role of a good reviewer—not just a gatekeeper, but a guardian of long-term velocity.

Writing code is easy. Reviewing code that stands the test of time? That’s the hard part. But it’s also where the impact lies.

In this post, we’ll explore how human reviewers can learn to spot these invisible bugs, ask better questions, and protect the system’s long-term health—not just its short-term correctness.

Table of Contents

- What Are Invisible Bugs?

- The Reviewer’s Role: Beyond the Diff

- The Long-Term Cost of Short-Term Thinking

- Building the Reviewer’s Mindset

- Creating a Culture of Long-Term Thinking

- Patterns That Signal Trouble Early

- AI Code Reviewers and AI-Generated Code

- Practical Review Techniques for Depth and Context

- Conclusion

What Are Invisible Bugs?

Invisible bugs are subtle, structural flaws that don’t cause immediate breakage—but quietly weaken your system over time. They pass tests. They behave correctly in demos. They blend into code that looks clean. But they quietly make your software harder to understand, extend, or operate as it evolves.

These issues don’t raise alarms today, but they’re exactly what slow teams down tomorrow. They take many forms—misplaced logic, tangled dependencies, fragile assumptions—and they creep in when decisions are made without considering how systems will grow, scale, or change.

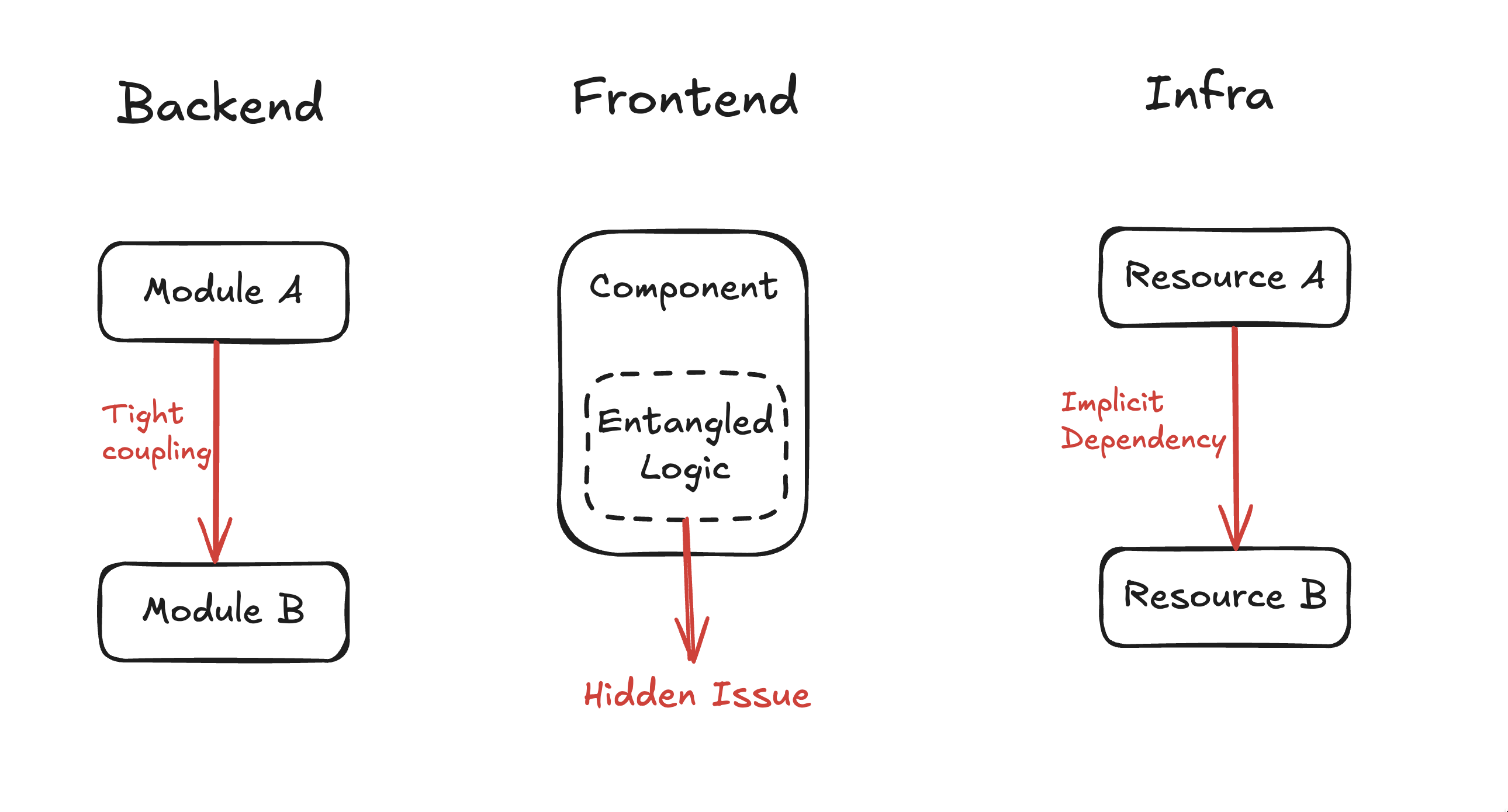

Where Invisible Bugs Hide: Across Every Layer

Invisible bugs aren’t limited to one type of system; they show up everywhere.

In backend systems, invisible bugs show up as tight coupling between modules, leaky abstractions, implicit dependencies, or misuse of design patterns. For example, a service might rely on another service’s internal data structure rather than its public interface. It works now—but the moment one changes, the other breaks. Or a seemingly harmless ORM abstraction may make simple queries wildly inefficient at scale.
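The ORM concern above can be sketched without any ORM at all. The example below is a minimal illustration, assuming a hypothetical `authors`/`posts` schema in an in-memory SQLite database; the `run` helper only stands in for an ORM's query log. It shows how an innocent-looking per-row lookup issues one query per author, while a single JOIN returns the same data in one round trip.

```python
import sqlite3

# Hypothetical schema, used only for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE posts (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT);
    INSERT INTO authors VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
    INSERT INTO posts VALUES (1, 1, 'A'), (2, 1, 'B'), (3, 2, 'C');
""")

query_count = 0

def run(sql, params=()):
    """Execute a query and count it, standing in for ORM query logging."""
    global query_count
    query_count += 1
    return conn.execute(sql, params).fetchall()

def titles_lazy():
    """What a lazy-loading accessor often does: one extra query per author."""
    result = {}
    for author_id, name in run("SELECT id, name FROM authors ORDER BY id"):
        rows = run(
            "SELECT title FROM posts WHERE author_id = ? ORDER BY id",
            (author_id,),
        )
        result[name] = [title for (title,) in rows]
    return result

def titles_joined():
    """The same result from a single JOIN."""
    result = {}
    rows = run("""
        SELECT a.name, p.title
        FROM authors a LEFT JOIN posts p ON p.author_id = a.id
        ORDER BY a.id, p.id
    """)
    for name, title in rows:
        result.setdefault(name, [])
        if title is not None:  # authors without posts yield a NULL title
            result[name].append(title)
    return result

query_count = 0
lazy = titles_lazy()
lazy_queries = query_count      # 1 + number of authors

query_count = 0
joined = titles_joined()
joined_queries = query_count    # always 1
```

With three authors the difference is four queries versus one, which no test will flag. With a hundred thousand authors it is the kind of cost that only shows up in production.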

In frontend systems, invisible bugs often take the form of bloated components, unclear separation of concerns, or state being passed in unpredictable ways. You might see a feature that works beautifully in isolation but has deeply entangled logic that resists reuse and makes UI state hard to manage across the app. These decisions don’t show up in visual regressions—but they slow future development.

In infra, invisible bugs can stem from scripts or configurations that assume a particular environment setup, hard-coded values, or implicit ordering of resource provisioning. These issues won’t appear in a dev environment but will cause outages or manual work when deploying to staging or production. Over time, these decisions lead to fragile infrastructure that’s difficult to scale or standardize.
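As a rough sketch of the environment assumption described above, compare a helper that bakes in a local address with one that reads its setting explicitly and refuses to guess. The `DATABASE_URL` variable name and both function names are assumptions made for this illustration, not part of any particular tool.

```python
import os
from typing import Mapping, Optional

def database_url_assumed() -> str:
    # Assumes every environment looks like the author's machine.
    return "postgres://localhost:5432/app"

def database_url(env: Optional[Mapping[str, str]] = None) -> str:
    """Read the URL from the environment and fail loudly if it is missing."""
    source = os.environ if env is None else env
    url = source.get("DATABASE_URL", "")
    if not url:
        raise RuntimeError("DATABASE_URL is not set; refusing to guess a default")
    return url
```

The first version works in dev and fails silently elsewhere by connecting to the wrong place. The second turns the hidden assumption into an explicit, early error.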

Why Don’t They Get Caught Early?

Invisible bugs pass undetected in early stages because they don’t violate correctness in the short term. Tests pass. Features ship. Metrics are stable. Code reviews that focus only on functionality or visual diffs won’t catch them—because the real issues are about structure, future use, and system evolution.

Stakeholders outside engineering often won’t notice these problems either. From their perspective, everything “works.” But these are the exact kinds of issues that quietly turn clean systems into legacy code.

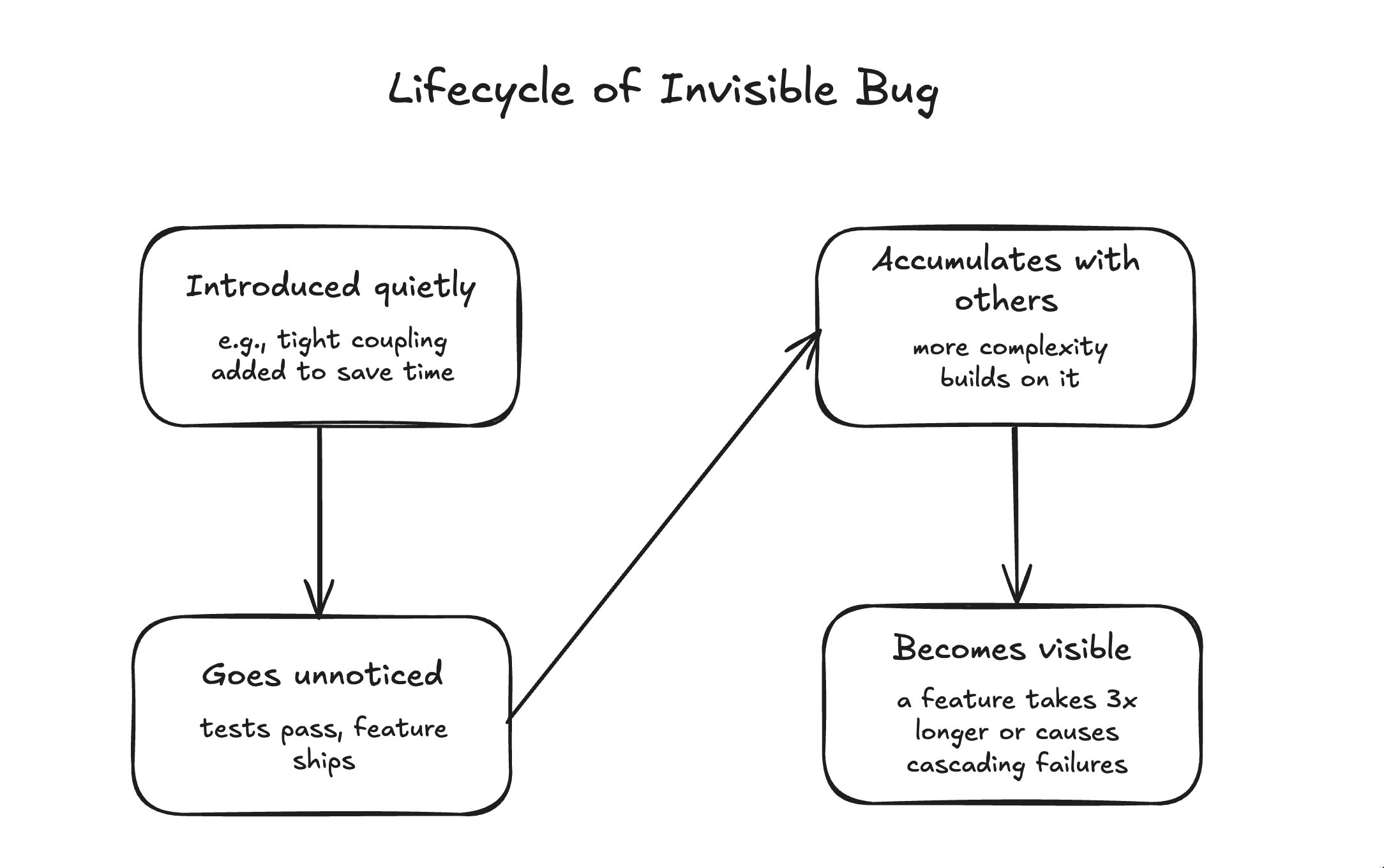

The Cost Compounds Over Time

The cost of invisible bugs compounds. One shortcut leads to another, one hack creates the need for a workaround, and slowly the team finds themselves spending more time navigating the codebase than improving it. Adding features becomes painful. Debugging takes longer. Onboarding new engineers gets harder.

Eventually, the team hits a velocity wall—not because of feature complexity, but because the foundation wasn’t built with care. At that point, the invisible bugs have become very visible—and very expensive.

The Reviewer’s Role: Beyond the Diff

A code reviewer’s job isn’t just to check if the code runs or the tests pass. That’s the starting point, not the finish line. The real value comes when the reviewer looks beyond the diff, beyond what’s changing, to evaluate how well the change fits into the system as a whole.

Good reviewers don’t just check code—they protect the system’s future. They ask the hard questions, look for the hidden costs, and ensure the work done today doesn’t become the problem everyone’s stuck with tomorrow.

Surface-Level Checks vs Structural Thinking

Surface-level checks are easy. Does the code compile? Are there unit tests? Is the naming clear? Is the feature working as expected?

These checks ensure the code works. But they say nothing about whether it fits. That’s where structural thinking begins:

- Does this logic belong here?

- Will this abstraction hold up under new requirements?

- Are we introducing hidden coupling or long-term constraints?

- Does this move us toward—or away from—our system goals?

Structural thinking means stepping back from the code and considering how it affects the overall system. It’s about how components interact, how data flows, how dependencies are managed, and whether the system remains flexible and maintainable as it grows.

Context Is Everything

Great reviewers understand the problem space—not just the change itself.

To review meaningfully, you need more than just local context. You need to understand why the code is being written, how it fits into broader goals, and where the system is going next. Without that awareness, it’s easy to accept a patch that solves today’s problem while creating tomorrow’s bottleneck.

For example, a developer uses a global cache to speed up a query. It works today. But if the architecture is shifting toward multi-tenant deployments or distributed services, that decision could create major issues later. A good reviewer questions whether the optimization makes sense in the direction the system is heading.
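To make the global-cache scenario concrete, here is a minimal sketch. Every name in it (`load_report`, `get_report_global`, `TenantCache`, and the tenant IDs) is hypothetical. The point is that a cache key that ignores the tenant is correct for one tenant and leaks data once there are two.

```python
# Module-level cache shared by every caller in the process.
_report_cache: dict[str, str] = {}

def load_report(tenant_id: str, report_name: str) -> str:
    """Stand-in for an expensive query; hypothetical, for illustration only."""
    return f"{report_name} for {tenant_id}"

def get_report_global(tenant_id: str, report_name: str) -> str:
    # The key ignores the tenant, so the first caller's data wins.
    if report_name not in _report_cache:
        _report_cache[report_name] = load_report(tenant_id, report_name)
    return _report_cache[report_name]

class TenantCache:
    """Cache that makes the tenant an explicit part of the key."""

    def __init__(self) -> None:
        self._entries: dict[tuple[str, str], str] = {}

    def get_report(self, tenant_id: str, report_name: str) -> str:
        key = (tenant_id, report_name)
        if key not in self._entries:
            self._entries[key] = load_report(tenant_id, report_name)
        return self._entries[key]

# With a single tenant the global cache behaves correctly.
first = get_report_global("acme", "sales")
# A second tenant receives the first tenant's cached data.
leaked = get_report_global("globex", "sales")

cache = TenantCache()
isolated = cache.get_report("globex", "sales")
```

Nothing in a single-tenant test suite would catch this, which is why the reviewer’s knowledge of where the architecture is heading matters more than the diff.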

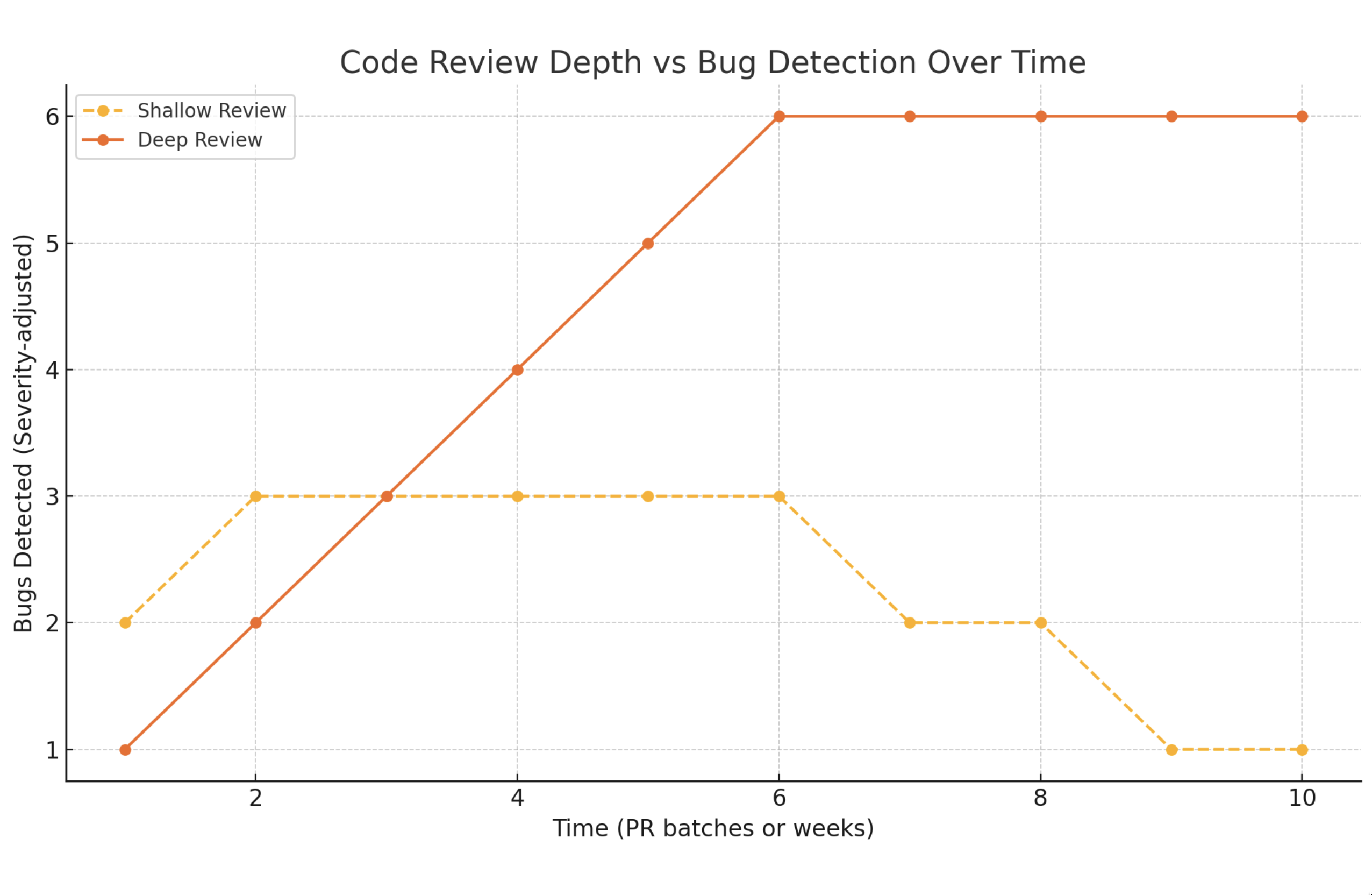

Subtle Mistakes That Pass Shallow Review

The graph above illustrates how code review depth affects bug detection over time:

- Shallow reviews catch a few bugs early, but plateau quickly—missing more as structural issues compound.

- Deep reviews catch more bugs over time, especially structural ones that would otherwise be invisible until much later.

Here are some subtle issues that often sneak through reviews unless the reviewer is thinking structurally:

- Backend: Adding logic that directly manipulates another module’s internal data instead of going through a clean interface. It “works,” but creates tight coupling that breaks encapsulation.

- Frontend: Using a shared global state or context where localized state would suffice. It’s fast to implement, but makes components interdependent and harder to test or reuse.

- Infrastructure: Writing an IaC module that depends on implicit resource ordering or assumes a specific region or environment config. It works in staging, but silently fails when deployed elsewhere.

Each of these might pass a standard code review focused on correctness and functionality. But if you zoom out, they’re silent liabilities: choices that quietly reduce system flexibility.

The Long-Term Cost of Short-Term Thinking

Invisible bugs often begin as small compromises—quick fixes, one-off solutions, or clever hacks made to hit a deadline. In the moment, they seem harmless. The code works. The feature ships. Everyone moves on.

According to Stripe’s Developer Coefficient report, developers spend roughly 42% of their working week on maintenance, such as dealing with technical debt and bad code. Much of that work traces back to past decisions, not missing features.

These choices can feel justified in the moment, especially under delivery pressure. But shortcuts are rarely neutral: they come at a price that teams usually pay later, often with interest, and often when speed matters most.

How Invisible Bugs Slow Down Teams Later

The biggest cost in software is not building it, it’s maintaining it. - Martin Fowler

At first, these structural issues remain hidden. The product ships. Metrics look fine. But over time, the weight starts to build:

- New features take longer to implement because the underlying architecture is hard to extend.

- Bugs become harder to diagnose because logic is spread across unrelated parts of the system.

- Onboarding slows down because no one can confidently explain how or why certain pieces were built the way they were.

- Teams spend more time maintaining workarounds than delivering new value.

Eventually, engineering velocity drops—not because the problems are technically harder, but because the system resists change. What was once an enabler becomes a drag.

Strategic Debt vs Invisible Debt

Not all shortcuts are bad. Sometimes, taking on strategic debt—making a conscious, documented trade-off—is necessary to move fast. What matters is how it’s taken on.

- Strategic debt is a calculated compromise with a clear plan for resolution. It’s logged, owned, and revisited.

- Invisible debt from invisible bugs is often unintentional. It builds in silence, buried in code that looks clean and functional on the surface.

The problem isn’t debt itself; it’s debt taken on without awareness or visibility. Invisible bugs are the most dangerous form of that, because you don’t know you owe anything until it’s too late.

Short-term thinking isn’t just about today’s code—it’s about tomorrow’s problems. A good reviewer sees beyond the immediate task and protects the system from choices that will limit its ability to grow. Because in the long run, nothing is more expensive than rebuilding what you rushed through the first time.

Imagine a growing startup that built a backend API quickly to validate its first product. The team hard-coded business rules directly into the API layer, avoided clear domain boundaries, and leaned heavily on a single shared database model. It worked great—fast to build, fast to demo, fast to iterate.

Two years later, that same API is supporting three products, dozens of engineers, and real customer data. Every new feature feels like a minefield. A small change in one module breaks behavior in another. No one wants to touch the legacy code. Eventually, they decide to rewrite large portions of the backend—costing months of time, slowing product delivery, and burning out engineers.

The original developers didn’t do anything “wrong”—they did what was needed at the time. But without thoughtful reviews, no one called out the invisible bugs early. And the cost of those hidden decisions came due when the company needed to scale fast.

Building the Reviewer’s Mindset

Strong code reviews don’t happen by chance—they come from a mindset rooted in ownership, context, and care for the long-term health of the system. Reviewing code is more than just checking boxes for functionality or style—it’s about being a second brain that tests not just what was built, but how and why it was built that way.

If you’re also interested in improving how you communicate during code review (tone, clarity, empathy), I’ve written about that in UX Notes for the Code Review Process. This post complements it by focusing on what to look for when protecting long-term system health.

This mindset is what separates someone who approves code from someone who protects the system.

What to Look for Beyond Correctness

Correctness is table stakes. Yes, the code should work. Yes, the tests should pass. But correctness only tells you that the code does what it claims to do—not whether it’s a good addition to the system.

A strong reviewer looks for:

- Is the logic in the right place?

- Are responsibilities clearly separated?

- Is the intent obvious, or buried?

- Can someone else build on this safely in six months?

- Are we aligning with or drifting from our system design?

These are harder to see than syntax errors or broken tests, but they’re what separate superficial reviews from impactful ones.

Building the reviewer’s mindset is about developing a sense of responsibility for the system, not just the code. It’s about caring enough to ask hard questions, dig beneath the surface, and help shape software that isn’t just functional today—but resilient tomorrow.

Questions Reviewers Should Consistently Ask

The best reviewers aren’t just spotting problems—they’re asking questions that challenge assumptions and expose risk. Here are a few they should return to often:

- Does this abstraction simplify future work or obscure it?

- Are we solving the right problem, or just patching symptoms?

- What assumptions does this code make about its environment?

- If we had to make this system multi-tenant, multi-region, or multi-team—would this still work?

- Is this pattern scalable, or is it just working by accident today?

- Would I feel confident debugging this if it broke six months from now?

Asking questions like these forces both reviewer and author to think deeply—not just about what is being done, but about what it means for the system over time.

The Balance Between Perfection and Pragmatism

It’s easy to fall into two traps: approving too quickly because “it works,” or blocking endlessly in pursuit of some ideal future state. Great reviewers avoid both.

The key is understanding the context of the change:

- Is this part of a critical core system, or a temporary migration?

- Is the shortcut documented and time-bounded, or buried and forgotten?

- Is there a meaningful reason to delay this now, or can we ship with eyes open and a follow-up plan?

Perfection isn’t the goal—awareness is. If a compromise is made, it should be visible, intentional, and discussed. Not all tech debt is bad, but all hidden tech debt is dangerous.

Creating a Culture of Long-Term Thinking

Catching invisible bugs and making thoughtful trade-offs can’t just be the job of a few senior engineers. To truly build resilient systems, the entire team needs to adopt a culture where long-term thinking is the default—baked into everyday workflows, not just aspirational slide decks.

This culture doesn’t emerge through process alone—it grows from shared values, language, and habits. It means creating space in the team’s day-to-day to think critically, challenge decisions respectfully, and document not just what you’re building, but why.

Normalize Catching Structural Issues Early

Invisible bugs thrive in environments where speed is the only goal, and “it works” is the only measure of success. To shift this, teams need to make structural thinking an everyday habit.

- Celebrate depth, not just speed. Celebrate when someone catches a subtle architectural issue, not just when they ship fast. This sets the tone for what’s valued.

- Make system ownership visible. Assign reviewers who go beyond correctness, who ask how this code shapes the system.

- Protect time for real reviews. If reviewing is a chore squeezed between tickets, structural problems will always be missed.

- Include near-misses in retros. When something almost broke or became painful, ask: was the root cause a structural blind spot?

When catching invisible bugs is a recognized part of the engineering craft, everyone feels responsible for quality—not just the author or a tech lead.

Encouraging Developers to Document Intent

One of the most powerful ways to fight invisible bugs is to surface intent. Invisible bugs often stem from decisions made in isolation—without clarity on the problem being solved or the trade-offs involved.

Long-term thinking doesn’t slow teams down—it keeps them fast when it matters most. By making structural quality a shared responsibility, and treating every review as a design conversation, teams create systems that scale not just in usage—but in time.

Encourage developers to:

- Write PR descriptions that explain reasoning, not just implementation. “What’s changing?” is useful. “Why this approach?” is essential.

- Add inline comments that clarify intent for non-obvious logic or structural decisions.

- Capture open questions or uncertainties in the review itself, making room for discussion instead of false certainty.

- Link to relevant design docs, discussions, or prior decisions to anchor the review in context.

Intent turns code from a static artifact into part of a living system. It gives reviewers something to reason about, not just verify.

Make Trade-Offs Explicit in Review

Not every decision needs to be perfect. All engineering is trade-offs. The danger isn’t in making them—it’s in hiding them.

If you’re shipping a quick fix, say so.

If you’re duplicating logic to unblock something, note that.

If you’re planning to refactor later, log a TODO or open an issue.

When trade-offs are explicit, they can be discussed, challenged, improved, or accepted knowingly. When they’re implicit, they fester into invisible bugs.

The review process is the perfect place to have these discussions. By treating every PR as an opportunity to align not just on code, but on approach, teams grow stronger systems—and stronger engineers.

Patterns That Signal Trouble Early

Invisible bugs are hard to spot directly—but they leave behind patterns. You can’t always see the long-term cost in the moment, but you can detect signals that something might not scale, evolve, or play well with the rest of the system.

These aren’t just “bad code smells.” They’re early warning signs that the system is drifting into fragility. A good reviewer trains their eye for these signals—and helps the team steer away from them while it’s still easy to course-correct.

Spotting these signals early can prevent a lot of rework, wasted effort, or system rewrites down the line.

Code Smells and Decision Smells

Code smells are patterns in code that may not be bugs now but suggest deeper design issues—like duplication, unclear boundaries, or inappropriate abstraction.

Decision smells are more subtle: signs that the underlying choices behind the code may not hold up as the system evolves. These include rushed trade-offs, unclear intent, or inconsistent reasoning.

Both types of smells are worth paying attention to, especially during reviews.

Common Structural Smells That Signal Risk

Here are six patterns that often lead to long-term issues:

1. Premature Abstraction

Creating layers of abstraction before there’s real variation in behavior. This leads to unnecessary indirection and cognitive load, especially when the abstraction has only one implementation.

Smell: “This abstraction is reusable—but there’s only one use.”
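A small illustration of this smell, using hypothetical names throughout (`Notifier`, `EmailNotifier`, `NotifierFactory`, `send_email`): an interface, a registry, and a factory wrapped around the only implementation that exists, next to the plain function that does the same job.

```python
from abc import ABC, abstractmethod

# Premature: an interface, a registry, and a factory for one implementation.
class Notifier(ABC):
    @abstractmethod
    def send(self, user: str, message: str) -> str: ...

class EmailNotifier(Notifier):
    def send(self, user: str, message: str) -> str:
        return f"email to {user}: {message}"

class NotifierFactory:
    _registry = {"email": EmailNotifier}

    @classmethod
    def create(cls, kind: str) -> Notifier:
        return cls._registry[kind]()

# Simpler: a plain function until a second channel actually exists.
def send_email(user: str, message: str) -> str:
    return f"email to {user}: {message}"

abstracted = NotifierFactory.create("email").send("ada", "hi")
direct = send_email("ada", "hi")
```

Both paths produce the same result, but the first asks every future reader to learn three types before finding one line of behavior. The abstraction may well be right later; the review question is whether it is right yet.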

2. Hard-Coded Assumptions

Environment details, magic numbers, or region-specific logic baked into core code.

Smell: “This will break if we add another region/customer/feature.”
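As a sketch of what this looks like in a diff, the first function below buries one region’s tax rate in core logic, while the second takes the region as an input. The region names and rates are made up for illustration. Amounts are integer cents and rates are basis points, which keeps the arithmetic exact.

```python
from dataclasses import dataclass

# Hard-coded: one region's tax rate is baked into core logic.
def total_hardcoded(subtotal_cents: int) -> int:
    return subtotal_cents * 120 // 100  # magic number: 20% tax

@dataclass(frozen=True)
class RegionConfig:
    name: str
    tax_basis_points: int  # 100 basis points = 1%

# Hypothetical regions and rates, for illustration only.
REGIONS = {
    "region-a": RegionConfig("region-a", 2000),
    "region-b": RegionConfig("region-b", 700),
}

def total(subtotal_cents: int, region: str) -> int:
    config = REGIONS[region]  # an unknown region raises KeyError instead of guessing
    return subtotal_cents * (10_000 + config.tax_basis_points) // 10_000
```

For the original region the two functions agree, so the hard-coded version passes every existing test. The difference only appears on the day a second region is added.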

3. Leaky Boundaries

Crossing module boundaries without clear interfaces—directly calling into other services’ internals or accessing deeply nested shared state.

Smell: “Changing one thing here requires edits in multiple unrelated places.”
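One way this shows up, sketched with a hypothetical `Inventory` class: a caller that reaches into `_stock` directly bypasses the checks that the public `reserve` method enforces.

```python
class Inventory:
    """Owns stock levels; the dict layout is an internal detail."""

    def __init__(self) -> None:
        self._stock: dict[str, int] = {"widget": 3}

    def reserve(self, sku: str, quantity: int) -> bool:
        """Public contract: reserve stock only if enough is available."""
        in_stock = self._stock.get(sku, 0)
        if quantity <= 0 or quantity > in_stock:
            return False
        self._stock[sku] = in_stock - quantity
        return True

    def available(self, sku: str) -> int:
        return self._stock.get(sku, 0)

def checkout_leaky(inventory: Inventory, sku: str, quantity: int) -> None:
    # Reaches into the internals and skips every invariant.
    inventory._stock[sku] -= quantity

def checkout(inventory: Inventory, sku: str, quantity: int) -> bool:
    # Depends only on the public method.
    return inventory.reserve(sku, quantity)

leaky_inventory = Inventory()
checkout_leaky(leaky_inventory, "widget", 5)
oversold = leaky_inventory.available("widget")  # stock has gone negative

safe_inventory = Inventory()
accepted = checkout(safe_inventory, "widget", 5)
remaining = safe_inventory.available("widget")
```

The leaky version also breaks the moment `Inventory` changes how it stores stock, which is exactly the "edits in multiple unrelated places" symptom.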

4. Clever or Compressed Logic

Code that “works” but is difficult to understand without deep context. Often optimized for brevity or elegance over clarity.

Smell: “This looks smart—but no one else wants to touch it.”
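For a feel of the difference, here are two hypothetical functions that return the same top-N word counts. The first compresses everything into one expression; the second names each step.

```python
from collections import Counter

# Compressed: correct, but the reader has to unpack it in their head.
def top_words_clever(text, n):
    return sorted({w: text.lower().split().count(w) for w in set(text.lower().split())}.items(), key=lambda kv: (-kv[1], kv[0]))[:n]

# Clear: the same behavior with each step named.
def top_words_clear(text: str, n: int) -> list[tuple[str, int]]:
    words = text.lower().split()
    counts = Counter(words)
    # Highest count first; ties broken alphabetically.
    by_count_then_alpha = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return by_count_then_alpha[:n]

sample = "the cat and the hat and the bat"
clever_result = top_words_clever(sample, 3)
clear_result = top_words_clear(sample, 3)
```

The two agree on output, so correctness alone cannot separate them. The compressed version also re-splits and re-counts the text for every distinct word, a cost that is easy to miss precisely because the line is hard to read.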

5. Repeated Patterns with Slight Variation

Repeated code that slightly differs across files or components—usually a sign of missing abstraction or inconsistent understanding of requirements.

Smell: “Why does the same thing happen three different ways?”
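A compact sketch of the pattern, with hypothetical function names: three near-copies of email normalization that have drifted apart, followed by a single shared definition.

```python
# Three near-copies that each encode a slightly different rule.
def normalize_signup_email(email: str) -> str:
    return email.strip().lower()

def normalize_login_email(email: str) -> str:
    return email.lower()   # never strips whitespace

def normalize_invite_email(email: str) -> str:
    return email.strip()   # never lowercases

# One shared definition of what "the same email" means.
def normalize_email(email: str) -> str:
    return email.strip().lower()

raw = "  Ada@Example.com "
variants = {
    normalize_signup_email(raw),
    normalize_login_email(raw),
    normalize_invite_email(raw),
}
```

Here `variants` ends up with three different strings for one input, so the same user can look like three different people depending on which flow they came through. Each copy looked fine in its own PR.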

6. Tightly Coupled Tests

Tests that rely on internal state, implementation details, or fragile setups rather than behavior and contracts. They make refactoring risky and brittle.

Smell: “Tests break often, not because behavior changed—but because the internals did.”
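The contrast can be sketched with a hypothetical `Cart` class and two tests. The first asserts on the private `_items` list; the second asserts only on what callers can observe.

```python
class Cart:
    def __init__(self) -> None:
        self._items: list[tuple[str, int]] = []  # internal representation

    def add(self, sku: str, price_cents: int) -> None:
        self._items.append((sku, price_cents))

    def total_cents(self) -> int:
        return sum(price for _, price in self._items)

def test_cart_coupled() -> None:
    cart = Cart()
    cart.add("widget", 500)
    # Fails if _items becomes a dict, even though behavior is unchanged.
    assert cart._items == [("widget", 500)]

def test_cart_behavior() -> None:
    cart = Cart()
    cart.add("widget", 500)
    cart.add("gadget", 250)
    # Survives any refactor that keeps the public contract.
    assert cart.total_cents() == 750
```

Both tests pass today. Only the second one will still pass after a refactor that changes how the cart stores its items, which is what makes it useful as a safety net.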

Raising These Patterns Constructively

Flagging these issues in review isn’t about nitpicking—it’s about protecting your future speed. The key is to frame concerns in a way that opens up conversation, not shuts it down.

- Ask, don’t accuse: “Curious, was there a reason this was abstracted now instead of waiting?”

- Share context or history: “In our billing flow, we had a similar shortcut. It slowed us down later when we needed to scale it.”

- Offer options, not ultimatums: “Would extracting a small helper here improve reuse without overcomplicating?”

- Focus on the system, not the person: The goal is to strengthen architecture, not to critique the author.

Being able to spot structural smells early is one of the most valuable reviewer skills. It prevents fragile code from becoming a foundation others are forced to build on. The earlier you catch it, the easier (and cheaper) it is to fix.

AI Code Reviewers and AI-Generated Code

As AI-assisted reviews become more common, the role of human engineers in code review is evolving. Modern AI tools are increasingly good at:

- Enforcing syntax and style

- Detecting obvious bugs

- Generating boilerplate or repetitive logic

- Even suggesting refactors or improvements

But they still lack what matters most for reviewing the shape of a system:

- Deep context of the business domain

- Awareness of long-term architectural goals

- Judgment about trade-offs under shifting requirements

- Intuition about what will be maintainable six months—or six teams—from now

GitHub’s Octoverse 2023 report notes over 30% of new code on their platform is AI-assisted. That number will grow—and so will the need for deeper human judgment.

AI can catch typos. It can even suggest patterns. But it doesn’t understand when a clever shortcut is dangerous or why one abstraction may age better than another.

AI Code Needs Even Sharper Review

Ironically, AI-generated code often needs more careful review, not less. It may be syntactically valid and even logically correct—but structurally fragile or semantically disconnected from the system’s intent. That’s where invisible bugs can thrive.

Human reviewers must take on a higher-order role: not just checking what the AI did, but assessing whether the decision makes sense in context.

Human Judgment Remains the Differentiator

If AI is accelerating the speed of software creation, then thoughtful human review becomes the throttle—the balancing force that ensures that speed doesn’t outpace stability.

Invisible bugs aren’t going away. In fact, in a world of machine-generated code, they may multiply faster. The need for human engineers to step back, ask the hard questions, and guard the system’s evolution is more important than ever.

Practical Review Techniques for Depth and Context

Thoughtful reviews don’t come from gut feeling or experience alone—they’re built on habits that help you consistently surface hidden risks, understand the bigger picture, and offer meaningful feedback. To review for long-term quality, you have to go beyond just reading code—you have to engage with its purpose, structure, and impact.

Look Beyond the Code Itself

A solid diff can still be a poor change if it doesn’t fit into the architecture or contradicts long-term design goals. Great reviewers routinely ask:

- How does this fit into the broader system? Is this reinforcing the current architecture or working around it?

- Is this aligned with how we’ve solved similar problems before? Is this consistent with patterns the team has already committed to?

- Does this code match the intent of the ticket or feature? Code that’s technically correct but misaligned with the product direction can be just as damaging as broken logic.

- Does this change improve or degrade maintainability? Would someone else be able to build on top of this comfortably?

A review should consider how well the code fits into the ongoing story of the system—not just how well it performs its role today. Even great code can be wrong for the system. Review the shape, not just the syntax.

When Context Is Missing, Ask for It

One of the most underused powers in code review is simply asking:

“Can you help me understand the reasoning behind this approach?”

If you don’t have enough context to evaluate a change structurally, say so. Good engineers welcome these questions—it signals that you’re engaged, not obstructive.

Ask when things aren’t clear:

- What’s the problem this is solving?

- Were other options considered? Why was this one chosen?

- How is this expected to evolve over time?

- Is this temporary, or a long-term part of the system?

If intent isn’t documented in the PR, ask the author to include it. Good teams normalize this as a healthy part of the review process, not as nitpicking.

Know When to Block, Advise, or Escalate

Effective reviewers aren’t just guardians of code—they’re collaborators in system design. They know how to dig into intent, ask the right questions, and raise flags where necessary. With the right habits and mindset, you can turn code reviews into a tool for architectural health, technical growth, and long-term velocity.

Not all concerns are created equal—and not all reviews should be hill-to-die-on moments. The impact of your feedback should match the weight of the issue.

Block when:

- It introduces architectural fragility or hidden coupling

- It adds irreversible complexity or debt without discussion

- It breaks assumptions that other parts of the system rely on

- It lacks test coverage for critical behavior or edge cases

Advise when:

- The solution works, but isn’t ideal or idiomatic

- There are better alternatives, but no clear immediate risk

- You’re unsure but want to surface a point for discussion

Escalate when:

- The change carries high system-wide impact and lacks design review

- There’s disagreement on direction that affects future architecture

- The trade-offs go beyond code and need alignment with leads or stakeholders

The goal isn’t to win arguments—it’s to make trade-offs visible, and ensure everyone is working with full awareness of what’s at stake. Blocking should be reserved for real risks. Advising should be frequent and constructive. Escalation should be rare—but deliberate and professional when needed.

Conclusion

Invisible bugs don’t crash your app. They crash your momentum.

They don’t show up in tests or dashboards. They show up later—when you’re racing to ship a feature and can’t move fast because your system won’t let you. The code works. But the structure is rigid, brittle, or confusing. And that invisible complexity? It’s costing you time, energy, and trust.

Reviewing for Today and Tomorrow

A good review ensures the code does what it’s supposed to. A great review goes further—it asks whether the change strengthens or weakens the system in the long run. It questions structure, intent, and fit. It ensures the foundations being laid today won’t become barriers tomorrow.

Reviewers who think beyond the diff act as stewards of the architecture, not just validators of syntax. They help the team write code that stays valuable, not just code that compiles.

Thoughtful Code Review Is a Core Engineering Skill

Long-term impact isn’t always glamorous. It won’t get noticed in a sprint demo. But it is what separates high-functioning engineering teams from those constantly stuck firefighting their past.

The ability to review code deeply—contextually, structurally, and critically—is a mark of engineering maturity. It’s how you move from being a contributor to being a builder of systems that last.

Good code review isn’t a formality. It’s engineering leadership in action.

Final Thought: Speed Isn’t Just Shipping Fast

In software, we often talk about velocity as how quickly you can build. But true velocity is how quickly you can keep building—without tripping over your past.

Invisible bugs slow you down at the worst possible moment: when you need to move fast. Thoughtful code review prevents this. It gives your team the power to scale confidently, evolve safely, and deliver with trust.

So the next time you review code, zoom out. Think about not just what this code is doing—but what it’s enabling. Today’s decisions are tomorrow’s constraints—or catalysts. Make them count.